How to Search & Best Practices

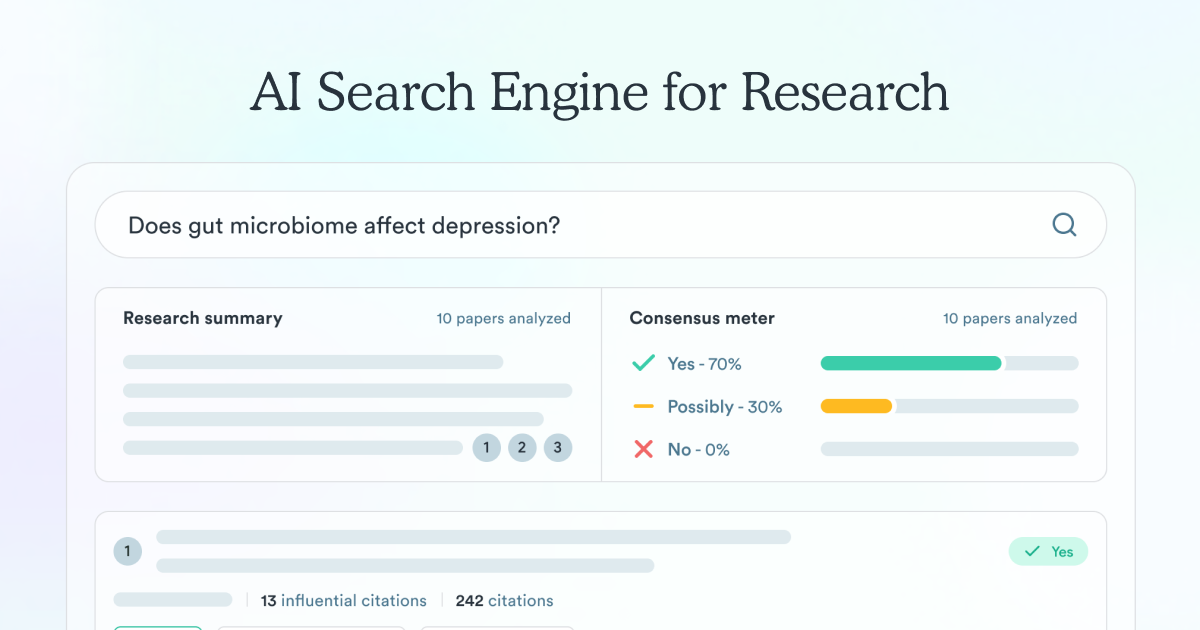

Have a question about science, health, fitness, or diet? Get cited, evidence-based insights with Consensus.

How to search with Consensus

There is no single ‘correct’ way to structure a Consensus query. However, always keep in mind that Consensus is a search engine, not a chatbot.

With every query, our purpose-built search engine first attempts to find the most relevant research papers to ground its response. This means that Consensus performs best when there is a research question or research topic at the “heart” of your query.

The most basic tips to follow when using Consensus are:

- Focus on subject matter that is likely covered in academic research papers

- Enter your query as something that resembles a research question (more on this below)

In this article, we will dive into some of the ways we have seen users get the most value from Consensus. Click on any of the links below to see what that search query looks like in Consensus!

Ask a yes/no question

Ask about the relationship between concepts

Copilot: Add a command to your search

Input an “open-ended phrase”

Two concepts separated by an “and”

Ask about “what is the best…”

Ask “how to” do something

Keyword searching

Long ‘chat-like’ commands for Copilot

Note: When you use Consensus, we always start with a search of the literature to ground our responses in scientific studies. The longer the command, the harder it becomes to find relevant papers to cite in our response!

Focus on the right subject matter

Consensus searches only peer-reviewed scientific research papers to find the most credible insights for your queries. Keep in mind, though, that this covers many fields:

Astronomy, Biology, Chemistry, Physics, Earth Science, Environmental Science, Oceanography, Materials Science, Computer Science, Mathematics, Statistics, Psychology, Sociology, Anthropology, Linguistics, Economics, Political Science, Geography, History of Science, Philosophy of Science, Biochemistry, Molecular Biology, Cellular Biology, Genetics, Neuroscience, Ecology, Meteorology, Geology, Paleontology, Zoology, Botany, Microbiology, Pharmacology, Biophysics, Quantum Mechanics, Relativity, Astrophysics, Organic Chemistry, Inorganic Chemistry, Analytical Chemistry, Physical Chemistry, Biotechnology, Information Technology, Software Engineering, Data Science, Artificial Intelligence, Robotics, Cognitive Science, Developmental Psychology, Clinical Psychology, Experimental Psychology, Industrial-Organizational Psychology, Social Psychology, Behavioral Science, Archaeology, Demography, Public Health, Nursing Science, Medicine, Veterinary Science, Dentistry, Agriculture, Forestry, Nutrition Science, Kinesiology, Environmental Health, Renewable Energy, Conservation Science, Space Science, Acoustics, Optics, Material Engineering, Civil Engineering, Mechanical Engineering, Electrical Engineering, Chemical Engineering, Aerospace Engineering, Nuclear Engineering, Systems Science, Cybernetics, Urban Studies, Science Education, Science Policy, Science Communication, Science and Technology Studies.

Here is a small sample of searches that have plenty of research papers to help answer them:

——

This page was created based on feedback from Consensus users.

Is there something we missed? Email us with your feedback!
